We explore deep learning-based Amazon Rekognition

In a slight change of pace from our recent explorations of Internet of Things platforms, we started digging into Amazon’s recently announced Rekognition service. Rekognition is a powerful image analysis service capable of detecting faces, people, objects, and subtler elements of a photo such as the scene and the subject’s sentiment. For each image it receives, Rekognition returns the attributes it has detected, each with a confidence score. It can also index faces into collections, compare faces across photos, and search a collection for faces that match a new photo. Users are then free to use the service and the data it provides for their own goals, whether that is searching through personal photos for a particular moment or building an identity verification system.

We decided to test Amazon Rekognition by making an automated, motion-activated, identity verification system that can run on a Raspberry Pi. This would complement some of our previous beacon work to track user location between offices. When someone walks into a room, our system should be able to detect motion, snap a picture of the individual, and compare the picture to reference pictures of known individuals.
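The flow described above (detect motion, snap a picture, compare it against known individuals) can be sketched as a single step function. This is a minimal sketch rather than our implementation: `motion_detected`, `capture_photo`, and `identify` are hypothetical callables standing in for the motion sensor, the camera, and the Rekognition comparison, so the control flow can be exercised without any hardware.

```python
from typing import Callable, Optional


def verify_on_motion(
    motion_detected: Callable[[], bool],
    capture_photo: Callable[[], bytes],
    identify: Callable[[bytes], Optional[str]],
) -> Optional[str]:
    """Run one pass of the hypothetical motion -> photo -> identity pipeline.

    Returns the name of the recognized person, or None if there was no
    motion or nobody in the photo matched a known individual.
    """
    # Do nothing (and take no photo) unless the sensor reports motion.
    if not motion_detected():
        return None
    # Snap a picture of whoever triggered the sensor.
    photo = capture_photo()
    # Compare it against the reference pictures of known individuals.
    return identify(photo)
```

On the Pi, a function like this would be called from a polling loop or a sensor callback, with `identify` wrapping the Rekognition face comparison.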

What We Did

Our first goal was to gain some basic familiarity with Amazon Rekognition by trying out its face-detection capabilities on various photos. We started with the AWS CLI. Once the CLI was installed, using Rekognition was simple and intuitive: all we needed to do was upload photos to an AWS S3 bucket and then reference the bucket and photo in CLI commands. (Read Amazon’s guide on this process.) Before long, Rekognition was returning information about our photos. From a picture of us in a conference room at the HopHacks hackathon, with a projection screen behind us, it detected “Conference Room” and “Projection Screen” with confidence levels of 97.7% and 82.4%, respectively.
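For readers who prefer Python to the CLI, the same label detection can be sketched with the AWS SDK for Python (boto3), whose Rekognition client exposes `detect_labels`, the counterpart of `aws rekognition detect-labels`. This is a minimal sketch, not the exact command we ran: the bucket and object names are placeholders, and the client is passed in as a parameter so the parsing logic can be tried without AWS credentials.

```python
from typing import Any, List, Tuple


def labels_for_s3_photo(
    client: Any, bucket: str, key: str, min_confidence: float = 80.0
) -> List[Tuple[str, float]]:
    """Ask Rekognition for labels on a photo stored in S3.

    `client` is expected to be a boto3 Rekognition client, e.g.
    boto3.client("rekognition"). Returns (label name, confidence) pairs,
    highest confidence first.
    """
    response = client.detect_labels(
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        MinConfidence=min_confidence,  # skip labels below this confidence (0-100)
    )
    labels = [(label["Name"], label["Confidence"]) for label in response["Labels"]]
    # Sort so the most confident labels come first.
    return sorted(labels, key=lambda pair: pair[1], reverse=True)
```

With real credentials, a call such as `labels_for_s3_photo(boto3.client("rekognition"), "my-bucket", "hophacks.jpg")` (hypothetical names) should return pairs like the “Conference Room” and “Projection Screen” labels above.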

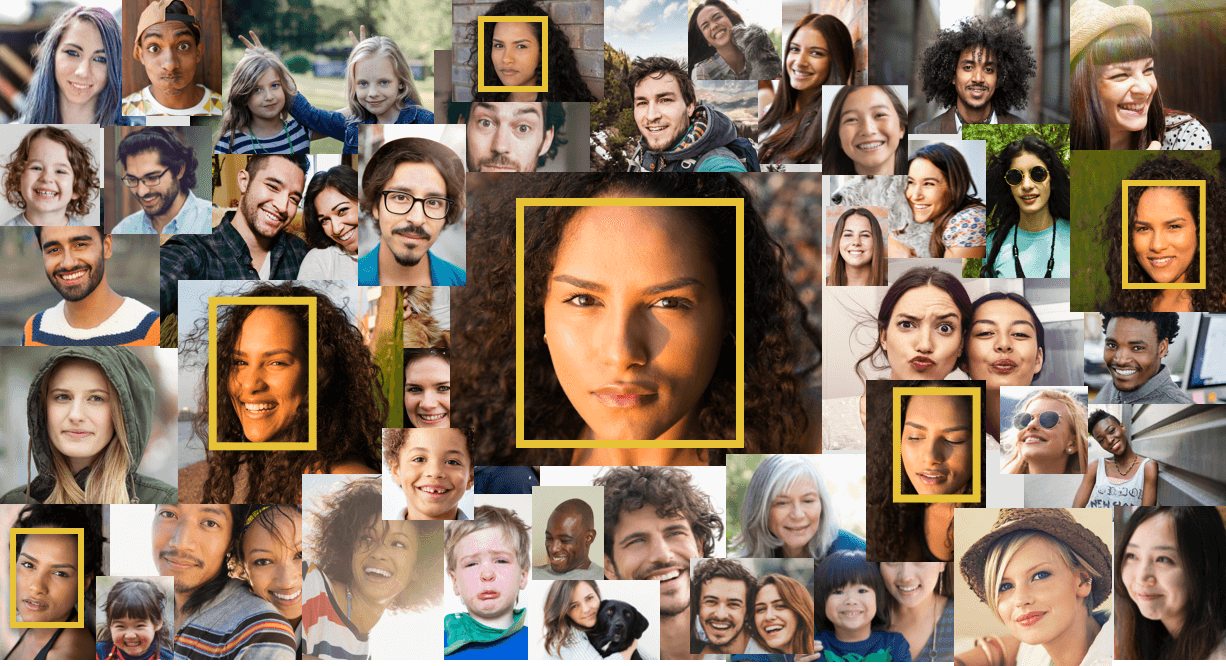

Detecting familiar faces is the most important feature for our goal of creating an identity verification system. Fortunately, detecting the faces in the test photo above and comparing them against reference images of our team to check for recognized faces turned out to be easy. Because we want to build a simple identity verification system, we need to understand how Rekognition’s performance is affected by variations in the input photos, such as image quality and how the subject is presented. We don’t need to be able to pick out a familiar face from a crowd of dozens of people, but we do need to accurately recognize familiar individuals even when they are not directly in front of or facing the camera.
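Comparing a test photo against a single reference photo maps onto Rekognition’s `CompareFaces` operation. The sketch below, again assuming a boto3 Rekognition client and hypothetical bucket and key names, keeps only the highest `Similarity` among the returned `FaceMatches`; an empty list means no face in the test photo matched the reference face at or above the threshold.

```python
from typing import Any, Optional


def best_similarity(
    client: Any,
    bucket: str,
    reference_key: str,
    test_key: str,
    threshold: float = 80.0,
) -> Optional[float]:
    """Compare the face in a reference photo with the faces in a test photo.

    Returns the highest similarity score (0-100) among the matched faces,
    or None if Rekognition reported no match at or above `threshold`.
    """
    response = client.compare_faces(
        # Rekognition uses the largest face in the source image as the reference.
        SourceImage={"S3Object": {"Bucket": bucket, "Name": reference_key}},
        TargetImage={"S3Object": {"Bucket": bucket, "Name": test_key}},
        SimilarityThreshold=threshold,
    )
    matches = response.get("FaceMatches", [])
    if not matches:
        return None
    return max(match["Similarity"] for match in matches)
```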

To get some data on Rekognition’s limits, we gathered 2 reference photos and 6 test photos taken in various settings, such as a grocery store and a seat by a window. We then wrote a shell script to automate our testing process. First, we indexed the faces from our 2 reference photos into a Collection, Rekognition’s mechanism for grouping faces so they can be searched later. Next, copies of each test photo were created at 100%, 90%, and so on down to 10% quality, and all 60 resulting test images were uploaded to our S3 bucket and compared against both reference photos. Finally, the script extracted the useful information from Rekognition’s responses and structured it as JSON.
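Our actual harness was a shell script; what follows is a minimal Python sketch of the same bookkeeping, not the script itself. It assumes each “quality” level means scaling both dimensions of a test photo by that percentage (which is how the findings below read) and flags copies that fall under Rekognition’s 80×80 minimum. Six test photos at ten levels give 6 × 10 = 60 test images, and comparing each against both reference photos gives 120 comparisons. File names and sizes used with it are hypothetical.

```python
import json
from typing import Dict, List, Tuple

# 100%, 90%, ..., 10%: the quality levels described above.
QUALITY_LEVELS = list(range(100, 0, -10))
# Rekognition's minimum image dimensions, in pixels.
MIN_SIDE = 80


def scaled_size(width: int, height: int, percent: int) -> Tuple[int, int]:
    """Assumed meaning of a quality level: scale both sides by `percent`."""
    return max(1, width * percent // 100), max(1, height * percent // 100)


def build_test_plan(
    test_photos: Dict[str, Tuple[int, int]], references: List[str]
) -> List[dict]:
    """One entry per (test photo, quality level, reference photo) comparison."""
    plan = []
    for name, (width, height) in test_photos.items():
        for percent in QUALITY_LEVELS:
            w, h = scaled_size(width, height, percent)
            for reference in references:
                plan.append({
                    "test_photo": name,
                    "quality": percent,
                    "size": [w, h],
                    "reference": reference,
                    # Copies this small are below Rekognition's minimum size.
                    "below_minimum": w < MIN_SIDE or h < MIN_SIDE,
                })
    return plan


def plan_as_json(plan: List[dict]) -> str:
    """Serialize the plan (or its results) in the JSON layout we collected."""
    return json.dumps(plan, indent=2)
```

For example, a hypothetical 900×600 photo scales to 450×300 at 50% and to 90×60 at 10%, and the 10% copy is flagged as below the minimum.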

Findings

The results were interesting, and we learned a few key lessons. Unsurprisingly, with our test photos scaled all the way down to double-digit pixel dimensions (approaching Rekognition’s impressively small minimum of 80×80 pixels), many photos were simply too low-resolution for Rekognition to detect a match. More surprisingly, something as simple as a mid-laugh smile made a significant difference in Rekognition’s ability to detect similarities. One of our test photos showed the subject smiling, and when it was compared against the reference photo in which the subject was also smiling, Amazon Rekognition recognized the subject in copies down to 50% of the original quality, with 93% confidence. However, when the test photos of the laughing subject were compared against the reference photo in which the subject had a serious expression, Rekognition was unable to recognize the subject at any quality level.

Similarly, when the subject appeared in a group with a partially obscured face, Rekognition was unable to recognize the subject using either reference photo, at any test photo quality. The transition from confident identification to failure was also rather abrupt: Rekognition generally went from identifying a subject with 80-90% confidence at one quality level to outright failure at the next lower one.
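Both findings (the lowest quality at which a subject was still recognized, and the abrupt drop-off below it) can be read off each photo’s results with a small helper. One hedged observation: by default, `CompareFaces` only returns matches with a similarity of at least 80%, and `SearchFacesByImage` uses the same default match threshold for collections, so part of the jump from 80-90% confidence to “no match” may reflect scores dipping just under that cut-off; rerunning with a lower threshold would reveal the underlying scores. The input format below (quality level mapped to best similarity, or None) is an assumed layout, not our script’s actual JSON schema.

```python
from typing import Dict, Optional, Tuple


def lowest_matching_quality(
    results: Dict[int, Optional[float]], threshold: float = 80.0
) -> Optional[Tuple[int, float]]:
    """Walk down the quality levels until recognition first fails.

    `results` maps a quality level (e.g. 100, 90, ..., 10) to the best
    similarity Rekognition reported at that level, or None for no match.
    Returns (quality, similarity) for the last level that still matched,
    or None if the subject was not recognized even at the highest level.
    """
    last_match = None
    for quality in sorted(results, reverse=True):
        similarity = results[quality]
        # A missing score or one under the threshold counts as a failure.
        if similarity is None or similarity < threshold:
            break
        last_match = (quality, similarity)
    return last_match
```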

Next Steps

Using our test data, we determined that the photos used in our identity verification system should be at least roughly 450×300 (or 300×450) pixels, as this is approximately the resolution of the lowest-quality test photos in which Amazon Rekognition could still recognize the subject. Ideally, subjects should also face the camera, stand close to it, and keep their faces unobscured. On the reference side, the results for the smiling test photo suggest it would be useful to have a few pictures of each known individual, with differing expressions or angles.
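These two recommendations translate into simple checks. This is a sketch under stated assumptions: the 450×300 floor is our rough empirical estimate, the 80% threshold mirrors Rekognition’s default match threshold, and `best_identity` is a hypothetical helper that takes each known individual’s similarity scores against their several reference photos and keeps the best one, so a smiling reference can succeed where a serious one fails.

```python
from typing import Dict, List, Optional, Tuple

# Rough floor from our tests: about 450x300 in either orientation.
MIN_LONG_SIDE = 450
MIN_SHORT_SIDE = 300


def meets_minimum_resolution(width: int, height: int) -> bool:
    """Accept landscape or portrait photos of at least ~450x300 pixels."""
    long_side, short_side = max(width, height), min(width, height)
    return long_side >= MIN_LONG_SIDE and short_side >= MIN_SHORT_SIDE


def best_identity(
    scores: Dict[str, List[float]], threshold: float = 80.0
) -> Optional[Tuple[str, float]]:
    """Pick the known individual whose best reference photo matched most strongly.

    `scores` maps a person's name to the similarity of the test photo
    against each of that person's reference photos (hypothetical format).
    Returns (name, similarity), or None if no score reaches `threshold`.
    """
    best = None
    for person, similarities in scores.items():
        if not similarities:
            continue
        # One good reference (e.g. a matching expression) is enough.
        top = max(similarities)
        if top >= threshold and (best is None or top > best[1]):
            best = (person, top)
    return best
```

Taking the maximum over a person’s references, rather than an average, reflects the smiling result: a single reference with a matching expression was enough, while a mismatched one contributed nothing.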

With this in mind, we have begun development on the next stage of our project: the camera. We have ordered an element14 Raspberry Pi Camera V2 and will set it up to work with our Raspberry Pi and to be triggered by a motion sensor.